
Template Nomad jobspecs with Levant

Nomad Pack is a new package manager and templating tool that can be used instead of Levant. Nomad Pack is currently in Tech Preview and may change during development.

With Levant you can create and submit Nomad job specifications from template job specifications. These template job specifications reduce the overall amount of boilerplate that you have to manage for job files that contain a significant amount of repeated code.

In this tutorial, you iteratively modify a template for rendering with Levant with the goal of deploying a Zookeeper node while reducing the overall job specification size and increasing modularity of job elements.

Prerequisites

You need:

A Nomad cluster with:

Consul integrated

Docker installed and available as a task driver

A Nomad host volume named zk1 configured on a Nomad client agent to persist the Zookeeper data

You should be:

Familiar with Go's text/template syntax. You can learn more about it in the Learn Go Template Syntax tutorial.

Comfortable in your shell of choice, specifically adding executables to the path, editing text files, and managing directories.

Download Levant

Install Levant using the instructions found in the README of its GitHub

repository. Use one of the methods that provides you with a binary, rather than

the Docker image. Verify that you have installed it to your executable path by

running levant version.

$ levant version

Levant v0.3.0-dev (d7d77077+CHANGES)

Build the starting job file

Create a text file called zookeeper.nomad with the following content.

job "zookeeper" {

datacenters = ["dc1"]

type = "service"

update {

max_parallel = 1

}

group "zk1" {

volume "zk" {

type = "host"

read_only = false

source = "zk1"

}

count = 1

restart {

attempts = 10

interval = "5m"

delay = "25s"

mode = "delay"

}

network {

port "client" {

to = -1

}

port "peer" {

to = -1

}

port "election" {

to = -1

}

port "admin" {

to = 8080

}

}

service {

tags = ["client","zk1"]

name = "zookeeper"

port = "client"

meta { ZK_ID = "1" }

address_mode = "host"

}

service {

tags = ["peer"]

name = "zookeeper"

port = "peer"

meta { ZK_ID = "1" }

address_mode = "host"

}

service {

tags = ["election"]

name = "zookeeper"

port = "election"

meta { ZK_ID = "1" }

address_mode = "host"

}

service {

tags = ["zk1-admin"]

name = "zookeeper"

port = "admin"

meta { ZK_ID = "1" }

address_mode = "host"

}

task "zookeeper" {

driver = "docker"

template {

data=<<EOF

{{- $MY_ID := "1" -}}

{{- range $tag, $services := service "zookeeper" | byTag -}}

{{- range $services -}}

{{- $ID := split "-" .ID -}}

{{- $ALLOC := join "-" (slice $ID 0 (subtract 1 (len $ID ))) -}}

{{- if .ServiceMeta.ZK_ID -}}

{{- scratch.MapSet "allocs" $ALLOC $ALLOC -}}

{{- scratch.MapSet "tags" $tag $tag -}}

{{- scratch.MapSet $ALLOC "ZK_ID" .ServiceMeta.ZK_ID -}}

{{- scratch.MapSet $ALLOC (printf "%s_%s" $tag "address") .Address -}}

{{- scratch.MapSet $ALLOC (printf "%s_%s" $tag "port") .Port -}}

{{- end -}}

{{- end -}}

{{- end -}}

{{- range $ai, $a := scratch.MapValues "allocs" -}}

{{- $alloc := scratch.Get $a -}}

{{- with $alloc -}}

server.{{ .ZK_ID }} = {{ .peer_address }}:{{ .peer_port }}:{{ .election_port }};{{.client_port}}{{println ""}}

{{- end -}}

{{- end -}}

EOF

destination = "config/zoo.cfg.dynamic"

change_mode = "noop"

}

template {

destination = "config/zoo.cfg"

data = <<EOH

tickTime=2000

initLimit=30

syncLimit=2

reconfigEnabled=true

dynamicConfigFile=/config/zoo.cfg.dynamic

dataDir=/data

standaloneEnabled=false

quorumListenOnAllIPs=true

EOH

}

env {

ZOO_MY_ID = 1

}

volume_mount {

volume = "zk"

destination = "/data"

read_only = false

}

config {

image = "zookeeper:3.6.1"

ports = ["client","peer","election","admin"]

volumes = [

"config:/config",

"config/zoo.cfg:/conf/zoo.cfg"

]

}

resources {

cpu = 300

memory = 256

}

}

}

}

This job creates a single Zookeeper instance, with dynamically selected values for the ZK client, quorum, leader election, and admin ports. These ports are all registered in Consul. The Zookeeper configuration is written out as a simple template and the Zookeeper cluster member configuration is generated by the template stanza using the addresses and ports advertised in Consul.
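The membership template groups the four service registrations of one allocation under a shared key by splitting each service ID on "-" and dropping the last segment. The following Go sketch illustrates that step and the shape of the resulting server line. The service IDs are hypothetical placeholders (Nomad generates the real ones), and the helper names allocKey and serverLine are invented for this illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// allocKey mirrors the split / slice / join steps in the template: it splits
// a service ID on "-" and rejoins every segment except the last one.
func allocKey(serviceID string) string {
	parts := strings.Split(serviceID, "-")
	return strings.Join(parts[:len(parts)-1], "-")
}

// serverLine formats one cluster member entry in the shape the template emits.
func serverLine(zkID, addr string, peer, election, client int) string {
	return fmt.Sprintf("server.%s = %s:%d:%d;%d", zkID, addr, peer, election, client)
}

func main() {
	// Hypothetical service IDs; both should map to the same key.
	for _, id := range []string{"alloc1-zk1-zookeeper-client", "alloc1-zk1-zookeeper-peer"} {
		fmt.Println(allocKey(id))
	}
	fmt.Println(serverLine("1", "10.0.2.51", 26262, 22589, 23977))
}
```

Because every service of one allocation maps to the same key, the template can collect the peer, election, and client ports into a single entry per Zookeeper node.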

Verify your configuration

Run this template in Nomad

Submit the job to the Nomad cluster.

$ nomad run zookeeper.nomad

==> Monitoring evaluation "498d1a25"

Evaluation triggered by job "zookeeper"

Allocation "b1111699" created: node "023f7896", group "zk1"

Evaluation within deployment: "f8dcf6f3"

Evaluation status changed: "pending" -> "complete"

==> Evaluation "498d1a25" finished with status "complete"

Verify Zookeeper

To verify your Zookeeper is up and responsive, connect to its admin port and

run the srvr four letter command.

Get Zookeeper's admin port

You can get the Zookeeper admin port in several ways. The following techniques use Consul DNS, the Nomad CLI, and the Nomad UI. To use Consul DNS, query the SRV record for the admin service.

$ dig @10.0.2.21 -p8600 SRV zk1-admin.zookeeper.service.consul

; <<>> DiG 9.10.6 <<>> @10.0.2.21 -p8600 SRV zk1-admin.zookeeper.service.consul

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 22461

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 2

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;zk1-admin.zookeeper.service.consul. IN SRV

;; ANSWER SECTION:

zk1-admin.zookeeper.service.consul. 0 IN SRV 1 1 26284 0a000233.addr.dc1.consul.

;; ADDITIONAL SECTION:

0a000233.addr.dc1.consul. 0 IN A 10.0.2.51

;; Query time: 54 msec

;; SERVER: 10.0.2.21#8600(10.0.2.21)

;; WHEN: Mon Nov 09 18:11:43 EST 2020

;; MSG SIZE rcvd: 123

This output indicates that the service is accessible at 10.0.2.51:26284.

Run nomad job status zookeeper and make a note of the running allocation's ID.

$ nomad job status zookeeper

ID = zookeeper

Name = zookeeper

Submit Date = 2020-11-09T17:40:17-05:00

Type = service

Priority = 50

Datacenters = dc1

Namespace = default

Status = running

Periodic = false

Parameterized = false

Summary

Task Group Queued Starting Running Failed Complete Lost

zk1 0 0 1 0 0 0

Latest Deployment

ID = f8dcf6f3

Status = successful

Description = Deployment completed successfully

Deployed

Task Group Desired Placed Healthy Unhealthy Progress Deadline

zk1 1 1 1 0 2020-11-09T17:53:30-05:00

Allocations

ID Node ID Task Group Version Desired Status Created Modified

b1111699 023f7896 zk1 0 run running 26m40s ago 26m29s ago

In this case the ID is b1111699.

Run nomad alloc status and supply the allocation ID.

$ nomad alloc status b1111699

ID = b1111699-fb35-cd46-3dda-dae3233e2373

Eval ID = 498d1a25

Name = zookeeper.zk1[0]

Node ID = 023f7896

Node Name = nomad-client-1.node.consul

Job ID = zookeeper

Job Version = 0

Client Status = running

Client Description = Tasks are running

Desired Status = run

Desired Description = <none>

Created = 28m47s ago

Modified = 28m36s ago

Deployment ID = f8dcf6f3

Deployment Health = healthy

Allocation Addresses

Label Dynamic Address

*client yes 10.0.2.51:23977 -> 23977

*peer yes 10.0.2.51:26262 -> 26262

*election yes 10.0.2.51:22589 -> 22589

*admin yes 10.0.2.51:26284 -> 8080

Task "zookeeper" is "running"

...

Consult the Allocation Addresses section of the output. In this example, the admin address is at 10.0.2.51:26284.

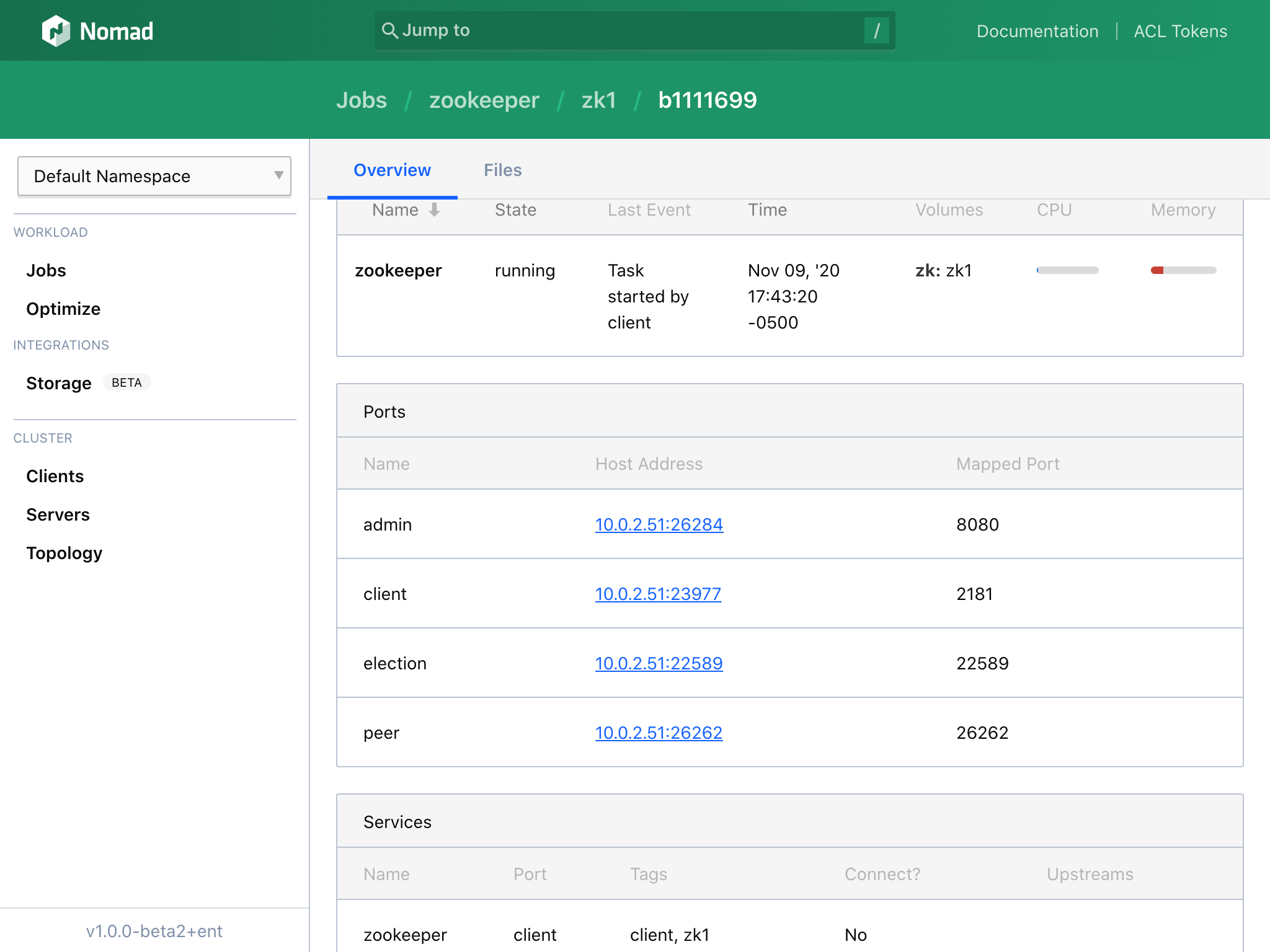

Open the Nomad UI in your browser. Select the running zookeeper job. From the job details screen, select the zk1 task group, select the running allocation's ID.

Once you are on the allocation detail page, you can get the port mappings by scrolling down to the Ports table.

Run srvr command via Zookeeper admin

Now that you have located the port that the admin server is running on, make a

request to run the srvr command. The following is an example using curl.

$ curl zk1-admin.zookeeper.service.consul:26284/commands/srvr

{

"version" : "3.6.1--104dcb3e3fb464b30c5186d229e00af9f332524b, built on 04/21/2020 15:01 GMT",

"read_only" : false,

"server_stats" : {

// ... more json ... //

}

}

Stop and purge the job

Run nomad stop -purge zookeeper to stop the Zookeeper instance. Using the

-purge flag while stopping reduces the amount of data you have to consult

if you have to debug a non-starting instance.

$ nomad stop -purge zookeeper

Make a template

Back up the original template by creating a copy named zookeeper.nomad.orig.

$ cp zookeeper.nomad zookeeper.nomad.orig

Optimize the template

Open zookeeper.nomad in a text editor. There is some file-like content that you might want to extract from the template for ease of maintenance, like the template used to generate the Zookeeper configuration in the zoo.cfg file. Also, notice that there are many repeated elements in the services and network ports. The following steps cover techniques for removing repeated or reusable material from your job specification.

Include zoo.cfg rather than inline it

One improvement involves making zoo.cfg external to the job template. For a lengthy configuration file, it's better to keep the template content separate from the rest of the configuration when possible. Navigate to the template stanza that creates the zoo.cfg file in the job.

template {

destination = "config/zoo.cfg"

data = <<EOH

tickTime=2000

initLimit=30

syncLimit=2

reconfigEnabled=true

dynamicConfigFile=/config/zoo.cfg.dynamic

dataDir=/data

standaloneEnabled=false

quorumListenOnAllIPs=true

EOH

}

Create a text file named zoo.cfg with the contents of the data element.

tickTime=2000

initLimit=30

syncLimit=2

reconfigEnabled=true

dynamicConfigFile=/config/zoo.cfg.dynamic

dataDir=/data

standaloneEnabled=false

quorumListenOnAllIPs=true

Remove this content from the data element and replace it with a call to Levant's

fileContents function. This loads the content from the zoo.cfg file and inserts

it in place of the action. Don't forget that Levant uses double square brackets

as its left and right delimiters for actions. Your template stanza should look

like the following.

template {

destination = "config/zoo.cfg"

data = <<EOH

[[ fileContents "zoo.cfg" ]]

EOH

}
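The double square brackets matter because the job file also contains double curly brace actions that must survive rendering untouched so that Nomad can process them at runtime. The following Go sketch shows the idea with the standard text/template package and custom delimiters. It is not Levant's implementation: the fileContents function here is a hypothetical stand-in that reads from an in-memory map, and renderWithBrackets is a name invented for this illustration.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// renderWithBrackets parses src with [[ and ]] as the action delimiters, so
// any {{ ... }} sequences are copied to the output as literal text.
func renderWithBrackets(src string, files map[string]string) (string, error) {
	funcs := template.FuncMap{
		// Hypothetical stand-in for Levant's fileContents: it looks the name
		// up in an in-memory map instead of reading from disk.
		"fileContents": func(name string) (string, error) {
			content, ok := files[name]
			if !ok {
				return "", fmt.Errorf("file not found: %s", name)
			}
			return content, nil
		},
	}
	tmpl, err := template.New("job").Delims("[[", "]]").Funcs(funcs).Parse(src)
	if err != nil {
		return "", err
	}
	var out strings.Builder
	if err := tmpl.Execute(&out, nil); err != nil {
		return "", err
	}
	return out.String(), nil
}

func main() {
	files := map[string]string{"zoo.cfg": "tickTime=2000\ninitLimit=30"}
	src := "[[ fileContents \"zoo.cfg\" ]]\nserver.{{ .ZK_ID }}\n"
	out, err := renderWithBrackets(src, files)
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```

The bracketed action is replaced by the file content, while the curly brace action passes through as plain text for a later rendering stage.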

Externalize the consul-template

Perform the same sort of change to the template stanza that builds the Zookeeper cluster membership.

template {

data=<<EOF

{{- $MY_ID := "1" -}}

{{- range $tag, $services := service "zookeeper" | byTag -}}

{{- range $services -}}

{{- $ID := split "-" .ID -}}

{{- $ALLOC := join "-" (slice $ID 0 (subtract 1 (len $ID ))) -}}

{{- if .ServiceMeta.ZK_ID -}}

{{- scratch.MapSet "allocs" $ALLOC $ALLOC -}}

{{- scratch.MapSet "tags" $tag $tag -}}

{{- scratch.MapSet $ALLOC "ZK_ID" .ServiceMeta.ZK_ID -}}

{{- scratch.MapSet $ALLOC (printf "%s_%s" $tag "address") .Address -}}

{{- scratch.MapSet $ALLOC (printf "%s_%s" $tag "port") .Port -}}

{{- end -}}

{{- end -}}

{{- end -}}

{{- range $ai, $a := scratch.MapValues "allocs" -}}

{{- $alloc := scratch.Get $a -}}

{{- with $alloc -}}

server.{{ .ZK_ID }} = {{ .peer_address }}:{{ .peer_port }}:{{ .election_port }};{{.client_port}}{{println ""}}

{{- end -}}

{{- end -}}

EOF

destination = "config/zoo.cfg.dynamic"

change_mode = "noop"

}

Create a file called template.go.tmpl with the contents of the template.

{{- range $tag, $services := service "zookeeper" | byTag -}}

{{- range $services -}}

{{- $ID := split "-" .ID -}}

{{- $ALLOC := join "-" (slice $ID 0 (subtract 1 (len $ID ))) -}}

{{- if .ServiceMeta.ZK_ID -}}

{{- scratch.MapSet "allocs" $ALLOC $ALLOC -}}

{{- scratch.MapSet "tags" $tag $tag -}}

{{- scratch.MapSet $ALLOC "ZK_ID" .ServiceMeta.ZK_ID -}}

{{- scratch.MapSet $ALLOC (printf "%s_%s" $tag "address") .Address -}}

{{- scratch.MapSet $ALLOC (printf "%s_%s" $tag "port") .Port -}}

{{- end -}}

{{- end -}}

{{- end -}}

{{- range $ai, $a := scratch.MapValues "allocs" -}}

{{- $alloc := scratch.Get $a -}}

{{- with $alloc -}}

server.{{ .ZK_ID }} = {{ .peer_address }}:{{ .peer_port }}:{{ .election_port }};{{.client_port}}{{println ""}}

{{- end -}}

{{- end -}}

Update the template stanza to use fileContents to import the template from file.

template {

data=<<EOF

[[fileContents "template.go.tmpl"]]

EOF

destination = "config/zoo.cfg.dynamic"

change_mode = "noop"

}

Validate your updates

Use the levant render command to validate that your updates to the job spec

are working as you expect.

$ levant render

Barring any errors, the template renders to the screen with the zoo.cfg and dynamic template content embedded in the rendered output.

Did you know? Levant automatically processes single files with a .nomad extension. Naming the backup zookeeper.nomad.orig prevented Levant from considering it when looking for a file to render.

Reduce boilerplate with iteration

Range over a list

Both the network stanza and the services contain configuration based on the

Zookeeper protocol. Start with the network stanza.

network {

port "client" {

to = -1

}

port "peer" {

to = -1

}

port "election" {

to = -1

}

port "admin" {

to = 8080

}

}

Create a list with values suitable to range over. Immediately before the network stanza and after the empty line, insert a line with this content.

[[- $Protocols := list "client" "peer" "election" "admin" ]]

This action creates a list named $Protocols that holds the protocol types. Note that the right delimiter does not suppress whitespace, which allows the network stanza to render in the correct place in the output. For outputs that do not require strict placement, like HCL, you could choose to do less whitespace management and end up with functional, but perhaps less readable, output.

Now, remove all of the content inside of the network stanza and replace it with this template code, which ranges over the list and creates the same configuration. Recall that range iterates over each element in a list and can save both the list index and the value into variables. Also, note the use of the [[- and -]] delimiters, along with actions that produce no visible output (such as [[ "" ]]), to control whitespace.

[[- range $I, $Protocol := $Protocols -]]

[[- $to := -1]]

[[- if eq $Protocol "admin" -]]

[[- $to = 8080 -]]

[[- end ]]

[[- if ne $I 0 -]][[- println "" -]][[- end ]]

port "[[$Protocol]]" {

to = [[$to]]

}

[[- end ]]

Re-render your template by running the levant render command and ensuring that

your network stanza contains client, peer, and election ports with to

values set to -1; and it contains an admin port with a to value of 8080.

Services stanzas

Locate the service stanzas in the job specification and note the amount of boilerplate. Use the same list to create corresponding service stanzas. The goal is to replace the static list given in the job file with a template-driven version.

service {

tags = ["client","zk1"]

name = "zookeeper"

port = "client"

meta { ZK_ID = "1" }

address_mode = "host"

}

service {

tags = ["peer"]

name = "zookeeper"

port = "peer"

meta { ZK_ID = "1" }

address_mode = "host"

}

service {

tags = ["election"]

name = "zookeeper"

port = "election"

meta { ZK_ID = "1" }

address_mode = "host"

}

service {

tags = ["zk1-admin"]

name = "zookeeper"

port = "admin"

meta { ZK_ID = "1" }

address_mode = "host"

}

This time, try to create a template that preserves the whitespace. If you get stuck, you can refer to the solution later in this section.

Test your solution by rendering the template using the levant render

command.

Remove the existing service stanzas and replace them with the following

template. This solution builds a list and uses toJson to format it for output

as the tags value; however, you could also use printf to build a string to

output as the tags value.

[[- range $I, $Protocol := $Protocols -]]

[[- $tags := list $Protocol -]]

[[ if eq $Protocol "client" ]][[- $tags = append $tags "zk1" -]][[- end -]]

[[ if eq $Protocol "admin" ]][[- $tags = list "zk1-admin" -]][[- end -]]

[[- println "" ]]

service {

tags = [[$tags | toJson]]

name = "zookeeper"

port = "[[$Protocol]]"

meta { ZK_ID = "1" }

address_mode = "host"

}

[[- end ]]

Submit the job with Levant

Levant also implements a Nomad client capable of submitting a rendered template directly to Nomad. Now that you have an equivalent template, use Levant to submit it to Nomad.

Levant uses the NOMAD_ADDR environment variable or -address= flag to

determine where to submit the job. Set the NOMAD_ADDR environment variable to an

appropriate value for your environment.

Run the levant deploy command. Levant monitors the deployment and waits

until it is complete before returning to the command line.

$ levant deploy

2020-11-10T14:01:10-05:00 |INFO| levant/deploy: using dynamic count 1 for group zk1 job_id=zookeeper

2020-11-10T14:01:10-05:00 |INFO| levant/deploy: triggering a deployment job_id=zookeeper

2020-11-10T14:01:11-05:00 |INFO| levant/deploy: evaluation 275a575d-9cd7-c073-f58f-6ef91a31308f finished successfully job_id=zookeeper

2020-11-10T14:01:11-05:00 |INFO| levant/deploy: beginning deployment watcher for job job_id=zookeeper

2020-11-10T14:01:22-05:00 |INFO| levant/deploy: deployment 6208bcc2-cac3-e7b7-e66e-2350b05f920e has completed successfully job_id=zookeeper

2020-11-10T14:01:22-05:00 |INFO| levant/deploy: job deployment successful job_id=zookeeper

Validate that your Zookeeper node is running properly by using the steps presented earlier in this tutorial.

Learn more

In this tutorial, you reduced the size of a Nomad job file by approximately one-third by externalizing certain elements of the job specification. By externalizing these elements, you can:

- Make them reusable in other jobs

- Reduce the visual noise for editors who may not be familiar with Nomad

- Allow for tighter controls in source control due to more granular artifacts

- Enable the creation of abstract job templates

Next, consider doing the Make Abstract Job Specs with Levant tutorial. It continues with this job specification, enhancing it to be abstract, configurable via a JSON file, and deployed via Levant.

Clean up

To clean up, stop the Zookeeper job with nomad job stop zookeeper

$ nomad job stop zookeeper

Keep your files and client configuration if you are continuing to the Make Abstract Job Specs with Levant tutorial.

Otherwise, you can clean up the files you used in this tutorial by removing the zookeeper.nomad.orig, zookeeper.nomad, zoo.cfg, and template.go.tmpl files from your machine.

Optionally, remove the zk1 host_volume from your client configuration and

delete the directory that backed it.